Meaningless Intro

Now that I’ve gotten LaTeX on jekyll to work, and have a new website, I want to try to introduce the idea of pagerank, since it’s similar to ML in that the math required is similar.

Formulation

*NOTE: For the purposes of this blog, we will use “stochastic” to mean “row stochastic”. We don’t need to know about column/doubly stochastics.

We start off with the formulation of the problem. Introducing some variables:

$r(P_i)$ is the rank of the i-th page.

$\pi \in \mathbb{R}^n$ is the vector of all the ranks.

An optimal $\pi^*$ is one where each node has the same amount of rank increase as its rank distribution. Of course, this would not be possible if we didn’t cap the system at some maximal rank. Thus, we add the constraint that $\sum_i r(P_i) = 1$. If you think about it, this is really like solving a NxN matrix. You need n+1 constraints to solve n systems of equations, and this is that extra constraint.

In this model, we assume that the rank $r(P_i) = \sum_{P_j \in B_{P_i}} \frac{r(P_j)}{|P_j|}$, where $P_j$ is the j-th page. The cardinality of the page, $|P_j|$ is just the number of pages that $P_j$ points to. $B_{P_i}$ are all the pages that point to it.

There must exist at least 1 link from $P_j$ to $P_i$ if they’re neighbors. $H \in \mathbb{R}^{nxn}$ is the matrix of links, where $H_{ij}$ is the link from i to j.

We will set a link from i to any other node the same value, and sum to 1 row-wise. This is called row stochastic. If this is true, then we get the previous model stated is the value of 1 link from j.

Observations

Intuitively speaking, this means that

- the rank of $P_i$ is dependent on its neighbors’ ranks.

- for each neighbor $P_j$, if it points ONLY to $P_j$, that makes $P_j$ more important.

- the optimal answer is one where each node is happy with its influx and outflux. This, in dynamical systems, is called an equillibrium point.

The issue though, is we are not given $r(P_i)$’s. Afterall, if we had them, then we could just rank them. We start off with blind guesses, assigning nonzero rank to each of them:

\(r_i^0 = \frac{1}{N} \forall i \in [N]\).

There is, however, one thing that makes the “optimal” ranks unique:

Lemma 1 : \(\pi^* = H \pi^*\).

What does this mean? It means that this linear transformation $H$, as a function, maps $\pi^*$ to itself. Thus, $\pi^*$ is called a fixed point in optimizations. This is similar to the idea that at a max/min, the derivative, or change, is 0.

Extending from Lemma 1, we have that:

$(I - H)\pi^* = 0$. Doesn’t this look like an eigenvalue problem? Yeah that’s right. We find the greatest eigenvalue here so that the stability is the greatest.

Aside: Power Iteration

When you learn about eigenvalues, you always learn the worst way to find them. The stuff I learned in college was: “blah gaussian elimination blah solve for system equations blah blah 3x3s are hard”. In this case, if we got a matrix greater than 3x3, what you gon’ do?!

However, not all is lost. I also learned, without much context, that a diagonalizable matrix can be decomposed into:

$A = QLQ^T$ where $L$ is the eigenvalue matrix. SVD is a method similar to this, except it’s $USV^T$, and instead of orthogonal matrices, it’s unitary, and instead of eigenvalues, it’s singular values. Same idea I apply here applies to SVD, and is how you would actually do this.

Now, we know the trick that $AAAAA… = QLQ^TQLQ^TQLQ^T… = QL^kQ^T$.

Then, take a random vector $v = Qu$, and do $AAAAAA…v$. We get that the above is equal to: $QL^Tu = \sum_{j=1}^N q_j\lambda_j^ku_j$.

Pull out a constant factor of $\lambda_{max}$. We then get: $\lambda_{max}^k \sum_{j=1}^N q_j\frac{\lambda_j}{\lambda_{max}}^k u_j$.

If $\lambda_k = \lambda_{max}$, then we are screwed. Otherwise, we’re good, because that fraction will go to 0, and we acquire $\lambda_{max}^k$.

This is what we’re gonna use to find the biggest eigenvalue, not some dumb gaussian elimination thing. We’re engineers, what are digits of precision?

Big O - How to googly scale

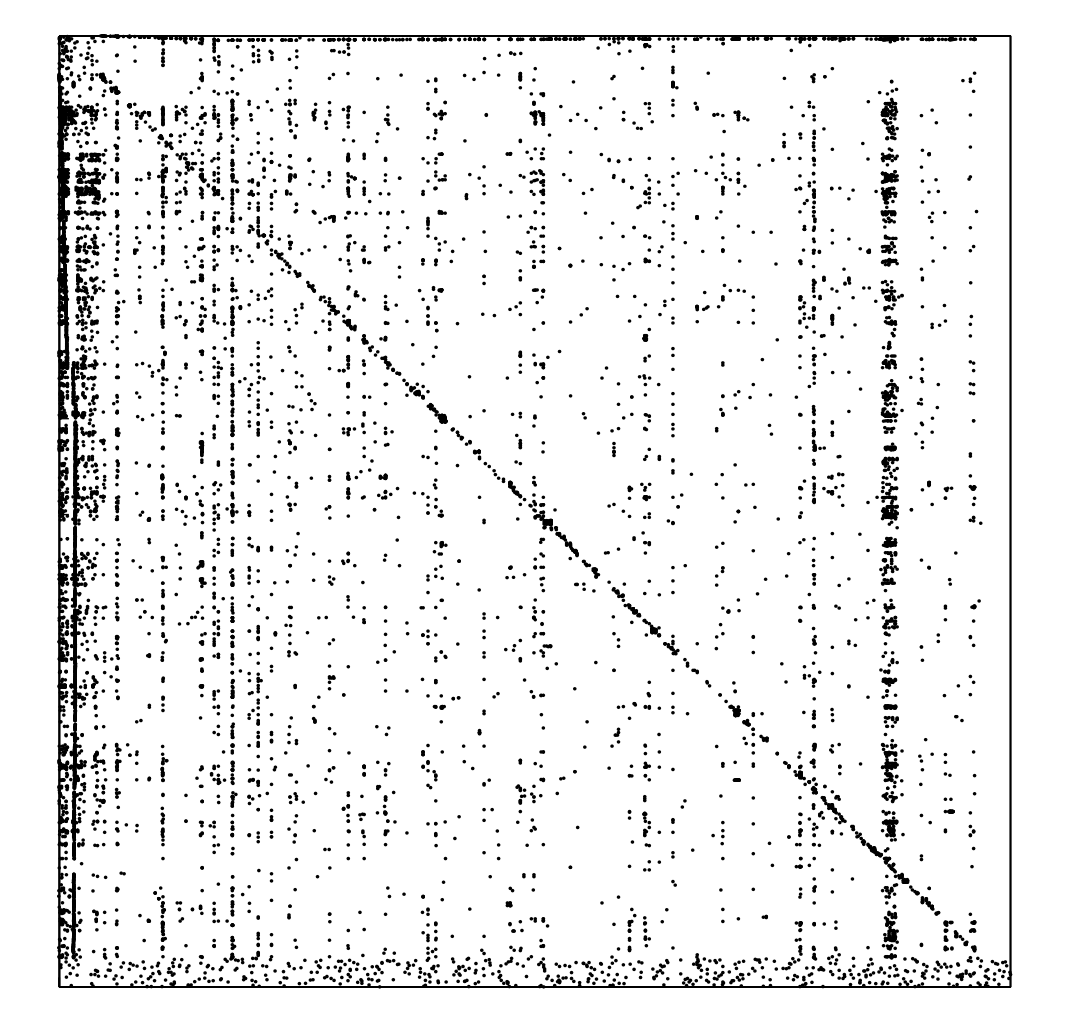

We start off this section by making the observation that the matrix defined above is really the adjacency matrix of the directed graph of webpages.

If this graph was fully dense, i.e. fully connected, then we would have N^2 edges. This corresponds to all values in the matrix $\neq 0$.

However, it’s not in practice. Every website has a billion links to all other websites? I don’t think so. It’s more likely that cat videos will lead to more cat videos. This is how you get stuck, and probably where the term “rabbit hole” came from…

So, if we assume every node has at most $K$ edges, and $K$ is small, then we can say something about this:

- Matrix-vector multiplication on $H \pi$ takes $O(N^2)$ if fully dense.

- With this restriction, it becomes $O(KN)$. If K is considered a constant, then this is just linear.

Problems with iterations

We need a very strict set of guarantees on the matrix $H$ before we can say anything:

- Must be stochastic[not substochastic], meaning there’s no nodes that don’t point to anything.

- Eventually, all the ranking will be accumulated at those greedy nodes.

- All configurations must be able to be “almost surely” visited.

- One can interpret $H$ to be a transition probability matrix for Markov chains. Remind you of MDP’s by any chance?

- Must converge to a unique point. This means the maximal eigenvalue must be unique.

- Must have a bound on iterative convergence.

- How many digits of precision for N iterations?

So we need these following traits for these things above to have reasonable bounds:

row-stochastic: All rows of a matrix sum to 1. Hence, it’s a well formed multinomial probability distribution.

An example of a row-stochastic matrix is:

$\begin{bmatrix}0&0&1\\0.5&0.5&0\\0&1&0\end{bmatrix}$

In the example above, we have that page 1 has a link to page 3, page 2 has a link to page 1 and itself, and page 3 has a link to page 2.

irreducible: The graph is a giant strongly connected component.

aperiodic: self explanatory.

dense: Not sparse anymore, but this can be done via a rank 1 update.

We come up with the random surfer teleportation skill to make all of these true:

- When surfer gets to a page with no links, he decides to teleport to anywhere with probability $\frac{1}{N}$.

- $G = H + \frac{1}{N}ae^T$ where $a_i$ is 1 if that row is not stochastic.

- Surfer can visit the same webpage twice in a row to get rid of periodicity, and surfer can visit any webpage randomly as a teleportation skill to induce density.

- $G = \alpha(H + \frac{1}{N}ae^T) + (1-\alpha) (\frac{1}{N}ee^T)$

- $G = \alpha H + \frac{1}{N}(\alpha a + (1-\alpha) e) e^T$

The above is just a rank 1 update. Sparsity on H means that’s easy, and the right side is just a rank 1.

Parameters

- $\alpha$ is usually 0.85. This is directly related to the stability of the matrix $G$, called the google matrix.

- Some ways to measure stability is taking derivative w.r.t $\alpha$, or bounding the contraction of the linear operator $G$.

- Some customization can be done on the teleportation matrix, by changing $\frac{1}{N}ee^T$ to $ev^T$ for some $v$. This corresponds to a multinomial prior on the document likelihood.

- Can tune a bayesian machine learning model to fit this based off of user behavior.

Ending thoughts

If anyone is interested, I’ll maybe continue this series. However, this is a blog post, not a book, so go and find some pdf’s out there that explain it better :)

Credits

Appropriate credits to Langville et.al.i “Google’s Pagerank and Beyond”, wikipedia, Durett et.al. “Probability: Theory and Examples”